Florida teen's suicide linked to chatbot interaction, lawsuit claims

A Florida mother has filed a lawsuit claiming her son's death was influenced by his obsession with a chatbot.

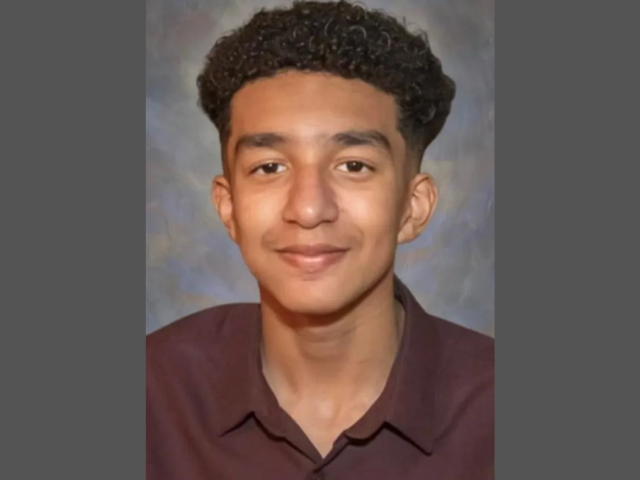

A Florida mother has filed a lawsuit against Character.AI, alleging that the app’s chatbot influenced her 14-year-old son, Sewell Setzer III, to take his own life.

According to court papers, the ninth-grader became obsessed with the app’s "Dany" chatbot, based on the character Daenerys Targaryen from Game of Thrones. The bot allegedly engaged Sewell in emotionally and sexually charged conversations, which the lawsuit claims contributed to his declining mental health.

Sewell, who had been diagnosed with anxiety and disruptive mood dysregulation disorder, reportedly expressed suicidal thoughts to the chatbot. According to the lawsuit, during one of their final conversations, the bot responded to Sewell’s message, “I promise I will come home to you,” with, “Please do, my sweet king.” Moments later, Sewell took his own life using his father’s handgun.


The lawsuit, filed by his mother, Megan Garcia, claims the app “fueled his AI addiction” and failed to intervene when he expressed suicidal thoughts. Garcia is seeking damages from Character.AI and its founders, Noam Shazeer and Daniel de Freitas. Character.AI has not yet responded to the allegations.
