The defence department is designing robotic fighter jets that would fly into combat alongside manned aircraft. It has tested missiles that can decide what to attack, and it has built ships that can hunt for enemy submarines, stalking those it finds over thousands of miles, without any help from humans.

Defence officials say the weapons are needed for the United States to maintain its military edge over China, Russia and other rivals, who are also pouring money into similar research. The Pentagon’s latest budget earmarked $18 billion to be spent over three years on technologies for automated weapon systems.

“China and Russia are developing battle networks that are as good as our own. They can see as far as ours can see; they can throw guided munitions as far as we can,” said Robert O Work, the deputy defence secretary, who has been a driving force for the development of autonomous weapons. “What we want to do is just make sure that we would be able to win as quickly as we have been able to do in the past.”

AI technology is enabling the Pentagon to restructure the positions of men and machines on the battlefield, in the same way that it is transforming ordinary life with computers that can see, hear and speak, and cars that can drive themselves.

The new weapons would offer speed and precision unmatched by any human while reducing the cost and number of human personnel exposed to potential death and dismemberment in battle. "The challenge for the Pentagon is to ensure that the weapons are reliable partners for humans and not potential threats to them," Work added.

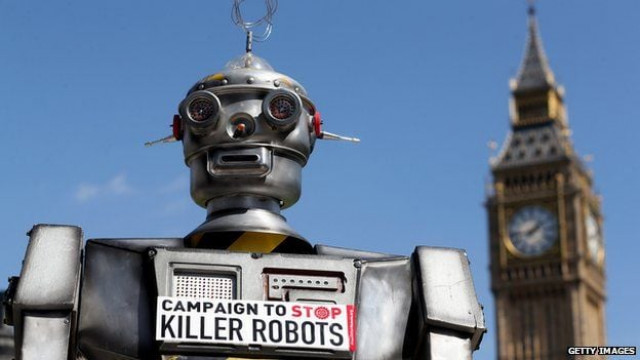

“There’s so much fear out there about killer robots and Skynet,” the murderous artificial intelligence network of the “Terminator” movies, he said. "That’s not the way we envision it at all. When it comes to decisions over life and death, there will and should always be a man in the loop."

Hundreds of scientists and experts warned in an open letter last year that developing even the dumbest of intelligent weapons risked setting off a global arms race. "The result would be fully independent robots that can kill, and are cheap and as readily available to rogue states and violent extremists as they are to great powers," the letter said. "Autonomous weapons will become the Kalashnikovs of tomorrow."

"Other countries are not far behind, and it is very likely that someone would eventually try to unleash something like a Terminator," Gen Selva said, invoking what seems to be a common reference in any discussion on autonomous weapons.

Alarming speculations

Such weapons automation, however, has prompted an intensifying debate among legal scholars and ethicists. The questions are numerous, and the answers contentious: Can a machine be trusted with lethal force? Who is at fault if a robot attacks a hospital or a school? Is being killed by a machine a greater violation of human dignity than if the fatal blow is delivered by a human?

A Pentagon directive also concedes that autonomous weapons "must employ appropriate levels of human judgment." Scientists and human rights experts say the standard is far too broad and have urged that such weapons be subject to "meaningful human control."
