The world's largest social media network said it plans to integrate its existing suicide prevention tools for Facebook posts into its live-streaming feature, Facebook Live, and its Messenger service.

Artificial intelligence will be used to help spot users with suicidal tendencies, the company said in a blog post on Wednesday.


In January, a 14-year-old foster child in Florida broadcast her suicide on Facebook Live, according to the New York Post.

Facebook is already using artificial intelligence to monitor offensive material in live video streams.

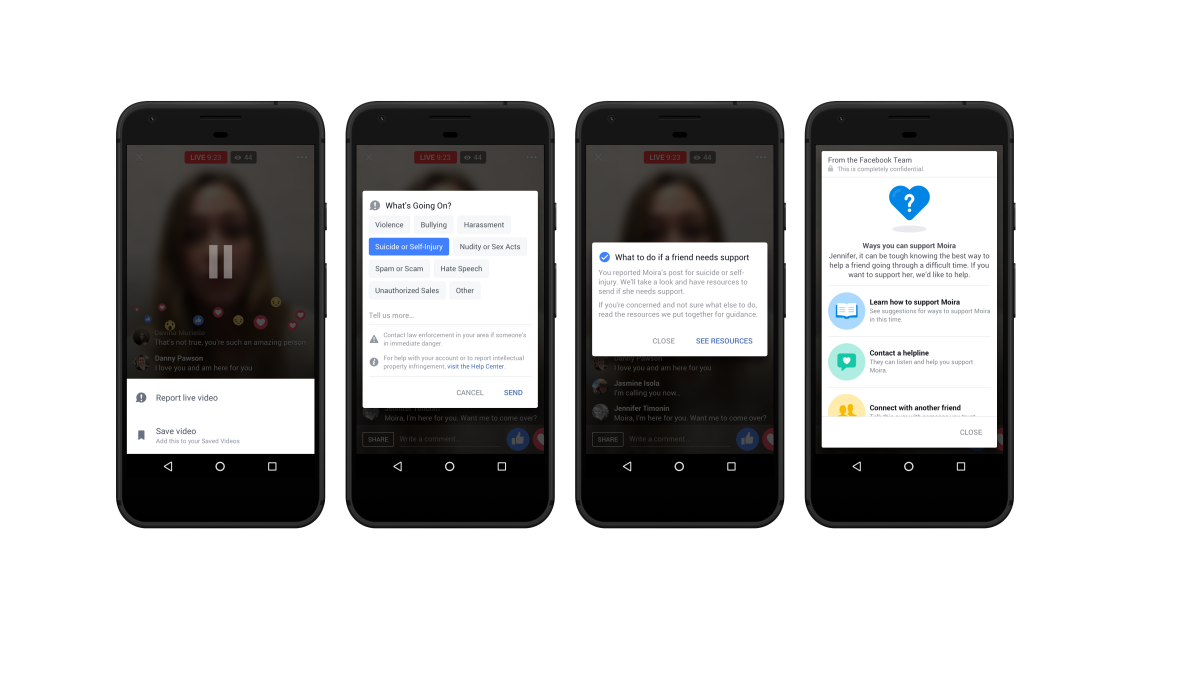

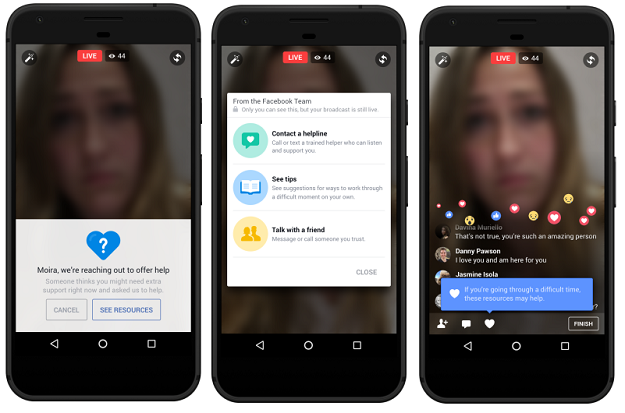

PHOTO: FACEBOOK

The company said on Wednesday that the updated tools would give users watching a live video the option to reach out to the person directly and to report the video to Facebook.


Facebook Inc will also provide the user reporting the live video with resources, such as options to reach out to a friend or contact a help line.

Suicide is the second-leading cause of death among 15- to 29-year-olds.

Suicide rates jumped 24 per cent in the United States between 1999 and 2014 after a period of nearly consistent decline, according to a National Center for Health Statistics study.

