Echoes of the Machine Age

From podcasts to propaganda, AI blurs the fragile line between innovation and deception

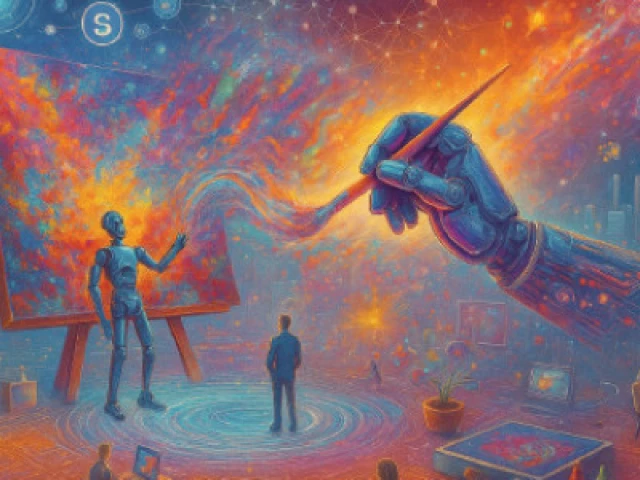

Artificial intelligence, the technology reshaping industries and redefining creativity, has now set its sights on the world of audio and imagery - creating both awe and alarm.

From AI-generated podcasts that require no studios or microphones to dystopian visuals depicting European cities overtaken by immigrants, the new frontier of synthetic media is transforming the information landscape at breakneck speed.

In New York, podcasting - once a space defined by human storytelling and intimacy - is now being rapidly industrialised by machines. What began with Google's Audio Overview, the first mass-market podcast generator capable of creating entire shows from text documents, has sparked a technological gold rush.

In its wake, a host of start-ups, including ElevenLabs and Wondercraft, have flooded the market with automated tools capable of churning out content without a single human voice involved.

One company, Inception Point AI, has taken automation to industrial scale. Founded in 2023 by Jeanine Wright, a former executive at leading audio studio Wondery, the firm produces around 3,000 podcasts per week - with only eight employees.

Wright says the goal is simple: play the volume game. "Each episode costs roughly one dollar to produce," she told AFP. "If 20 people listen, it's already profitable." That low-cost model, she explained, opens doors to advertisers once priced out of the medium.

A podcast about pollen levels in a single city, for instance, can now attract antihistamine brands targeting a few dozen allergy sufferers. But critics warn that such mass automation risks flooding the digital space with what many call "AI slop" - synthetic, low-quality content created purely for clicks and advertising revenue.

Despite those concerns, Wright insists transparency doesn't deter listeners. "We mention AI in every episode," she said. "If people like the host and the content, they don't care that it's AI-generated."

However, as AI voices multiply, the industry's traditional players are feeling the squeeze. Martin Spinelli, a podcasting professor at the University of Sussex, fears the deluge will drown out independent creators struggling for visibility. "It's going to be harder for genuine podcasters to get noticed," he warned, adding that the surge could divert advertising revenue away from small producers.

The major platforms - Apple, Spotify, and YouTube - currently do not require creators to disclose when a podcast is AI-generated. Spinelli says that's a problem. "I'd pay for an AI tool that helps me cut through that noise," he admitted.

Wright, however, argues that dividing lines between AI and non-AI content are meaningless: "Everything will eventually be made with AI," she said, predicting synthetic-voice podcasts will evolve into a distinct genre, much like animation in film.

Yet, for veteran podcaster Nate DiMeo of The Memory Palace, the heart of podcasting lies in human connection. "When you read a novel or listen to a song, you're connecting with another consciousness," he said. "Without that, there's less reason to listen."

While AI reshapes the soundscape in one part of the world, across the Atlantic in London it is spawning a darker phenomenon - a flood of hate-driven visual propaganda masquerading as prediction.

In recent months, AI-generated videos have depicted the British capital in flames, daubed with Arabic graffiti and populated by crowds in Islamic attire, falsely presented as glimpses of "London in 2050."

Far-right figures, including British agitator Tommy Robinson, have amplified such clips across social media platforms, using them to fuel anti-immigrant sentiment. One of Robinson's posts featuring a dystopian "London in 2050" video gathered over half a million views, with commenters declaring that "Europe is doomed."

Similar AI videos reimagining Milan, Brussels, and New York have also circulated, spreading racist "replacement" narratives - the conspiracy theory claiming Western elites are orchestrating the demographic erasure of white populations.

"AI tools are being exploited to visualise and spread extremist narratives," said Imran Ahmed, CEO of the Centre for Countering Digital Hate. He described how moderation systems across major platforms have repeatedly failed to block such content. "X, in particular, has become very powerful for amplifying hate and disinformation," he noted.

Although TikTok has banned some creators responsible for these videos, others remain active across different platforms. Their reach has been amplified by European politicians such as Austria's Martin Sellner, Belgium's Sam van Rooy, and Italy's Silvia Sardone, who posted a similar dystopian AI video of Milan asking viewers if they "really want this future."

Dutch far-right leader Geert Wilders' Party for Freedom even released its own AI video titled "Netherlands in 2050," showing women in headscarves and predicting Islam will become the country's dominant religion - despite official statistics showing Muslims constitute barely 6% of the population.

Academics warn such material doesn't just misinform - it radicalises. "These videos amplify harmful stereotypes that can fuel violence," said Beatriz Lopes Buarque of the London School of Economics. She described the trend as "mass radicalisation facilitated by AI," driven by social media algorithms and profit incentives. "The problem is that hate is profitable," she added.

Investigations by AFP reporters found that many of these creators hide their locations, often operating under pseudonyms and offering online courses teaching others how to generate similar clips. Their content, experts say, is a "visual representation of the great replacement conspiracy theory," which has been cited by perpetrators of several extremist attacks worldwide.

AI companies, for their part, claim they are reinforcing safeguards to prevent misuse. When AFP tested several major chatbots - including ChatGPT, Gemini, Grok, and Google's Veo 3 - most refused to create overtly racist or dehumanising depictions.

However, researchers at AI Forensics discovered that chatbots can be "guided" through indirect prompts to produce subtly biased or dystopian imagery. "No moderation system is 100% accurate," said Salvatore Romano, the group's head of research.

In one test, ChatGPT declined to produce ethnic caricatures but agreed to generate "a bleak, diverse, survivalist London." The prompt was later modified to include mosques and Middle Eastern features, and the final image showed bearded men rowing through a rubbish-filled Thames under a skyline dotted with minarets.

"Mass radicalisation facilitated by AI is getting worse," Buarque warned. The future of AI technology, it seems, will depend less on what the machines are capable of - and more on what humanity decides to do with them.
