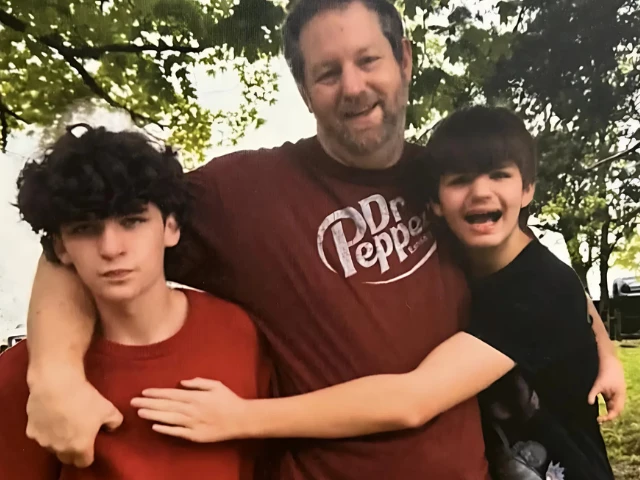

Family grieves teen's death due to AI sextortion scam, demands action

Elijah Heacock's tragic death from an AI sextortion scam has pushed his family to demand stricter online laws

Elijah Heacock’s life was cut short by a cruel form of exploitation that has grown increasingly prevalent in the digital age.

The 16-year-old was blackmailed with an AI-generated nude image by scammers who demanded $3,000 to keep it from being shared with his friends and family.

Overcome by the threat, he died by suicide shortly after receiving the message.

His parents, John Burnett and Shannon Heacock, had never heard of sextortion before their son's death. “We had no idea what was happening,” Burnett recalls. “The people who do this are organized and relentless. And with generative AI, they don’t even need real photos.”

Sextortion scams have exploded in recent years, with over 500,000 reports filed to the National Center for Missing and Exploited Children.

The FBI estimates that at least 20 young people have died by suicide due to these scams since 2021. The rise of generative AI has made these crimes easier to commit, as predators can now fabricate explicit images with alarming ease.

In response, Elijah’s parents are pushing for stricter laws and support the “Take It Down” Act, a recent law that makes it a crime to share explicit images of someone without consent, even if the images are AI-generated.

They hope their tragedy will catalyze broader action to protect children from online predators.
