AI constructs accurate face portraits using voice recordings

Researchers have created AI software that uses short voice clips of speakers to generate an accurate portrait.

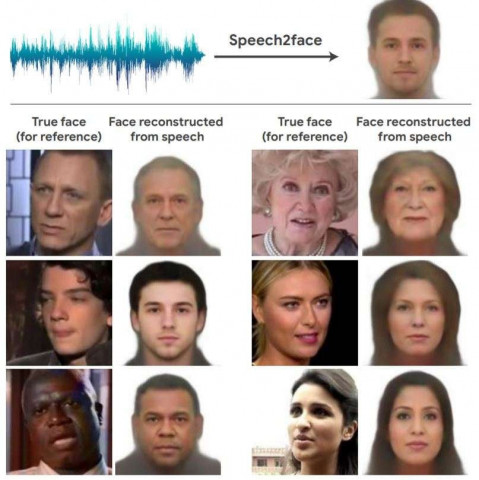

AI researchers have created a program that generates a portrait of a person using just a short recording of that person speaking. The scientists at MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) first published a paper on the AI algorithm, called Speech2Face, in 2019, and it produced surprisingly accurate results.

The researchers designed and trained a neural network with the help of millions of YouTube videos of people talking. During training, the AI learnt the correlation between how individuals sounded and how they looked, with special regard to gender, ethnicity and age.

The study involved minimal human supervision: the algorithm learnt on its own from a trove of videos, determining the correlation between a speaker's voice and their appearance. To analyze the accuracy of the portraits the AI was constructing, the researchers built a 'face decoder' that created a standard reconstruction of a person's face from a still frame, ignoring irrelevant features such as the lighting in the picture. This allowed the scientists to easily compare the voice reconstructions with the actual facial features of the speaker.
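As a rough illustration of what comparing a voice-based reconstruction with the speaker's actual features can look like, the sketch below scores two faces by the cosine similarity of their feature vectors. This is an assumption made for illustration, not the method described in the paper: the function name `cosine_similarity` and the three-number feature vectors are hypothetical toy values.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine similarity of two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("feature vectors must be non-zero")
    return dot / (norm_a * norm_b)


# Hypothetical toy feature vectors (not real data from the study).
face_from_image = [0.9, 0.1, 0.4]    # features taken from a still frame
face_from_voice = [0.8, 0.2, 0.5]    # features predicted from the voice clip
unrelated_face = [-0.7, 0.9, -0.2]   # features of a different person

match_score = cosine_similarity(face_from_image, face_from_voice)
mismatch_score = cosine_similarity(face_from_image, unrelated_face)
print(match_score > mismatch_score)
```

A score close to 1 means the two feature vectors point in nearly the same direction, so under this assumed measure a good voice reconstruction should score higher against its own speaker than against an unrelated face.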

Figure: Speech2Face results. For each input speech clip, the panels show the original image (reference frame), the reconstruction from the image, and the reconstruction from audio.

While the results were strikingly accurate, the AI performed poorly when confronted with factors such as accent, spoken language, and voice pitch, which led to “speech-face mismatches” in which the guessed age, gender or ethnicity was incorrect. People with high-pitched voices were generally identified by the software as female, while low-pitched voices were categorized as male. Similarly, an Asian man speaking English rather than Chinese produced an incorrect ethnicity guess.

Figure: Examples of speech-face mismatches: (a) gender mismatch, (b) ethnicity mismatch, (c) age mismatch (old to young), (d) age mismatch (young to old).

In their paper, the researchers wrote that “Our reconstructed faces may also be used directly, to assign faces to machine-generated voices used in home devices and virtual assistants.”

While the paper acknowledged the study's ethical weaknesses and the limitations of drawing its data from only a selected set of videos on the internet, the researchers are optimistic that “a more comprehensive view of voice face correlations can open up new research opportunities and applications.”
