Facebook users may not be learning about jobs for which they are qualified because the company’s tools can disproportionately direct ads to a particular gender “beyond what can be legally justified,” university researchers said in a study published on Friday.

According to the study, in one of three examples that generated similar results, Facebook targeted an Instacart delivery job ad to a female-heavy audience and a Domino’s Pizza delivery job ad to a male-heavy viewership.

Instacart’s drivers are mostly women and Domino’s drivers are mostly men, according to the study by University of Southern California researchers.

In contrast, Microsoft Corp’s LinkedIn showed the Domino’s delivery job ad to about the same proportion of women as it did the Instacart ad.

“Facebook’s ad delivery can result in skew of job ad delivery by gender beyond what can be legally justified by possible differences in qualifications,” the study said. The finding strengthens the argument that Facebook’s algorithms may be in violation of US anti-discrimination laws, it added.

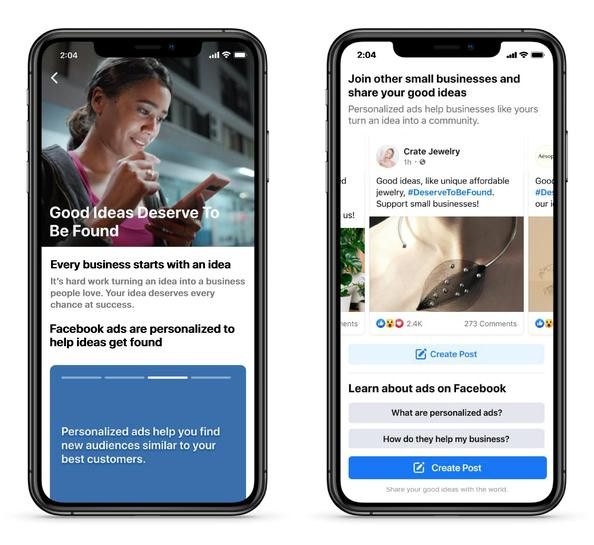

Facebook spokesman Joe Osborne said the company accounts for “many signals to try and serve people ads they will be most interested in, but we understand the concerns raised in the report.”

Amid lawsuits and regulatory probes on discrimination through ad targeting, Facebook has tightened controls to prevent clients from excluding some groups from seeing jobs, housing, and other ads.

But researchers remain concerned about bias in artificial intelligence (AI) software choosing which users see an ad. Facebook and LinkedIn both said they study their AI for what the tech industry calls “fairness.”

LinkedIn engineering vice president Ashvin Kannan said the study’s results “align with our own internal review of our job ads ecosystem.”
