Meta has apologized for a recent influx of graphic content on Instagram Reels, after users reported seeing videos featuring violence, gore, and other disturbing imagery in their feeds.

The company confirmed the surge was the result of an "error" and said the issue had now been resolved.

"We have fixed an error that caused some users to see content in their Instagram Reels feed that should not have been recommended," a Meta spokesperson said in a statement to Business Insider on Wednesday.

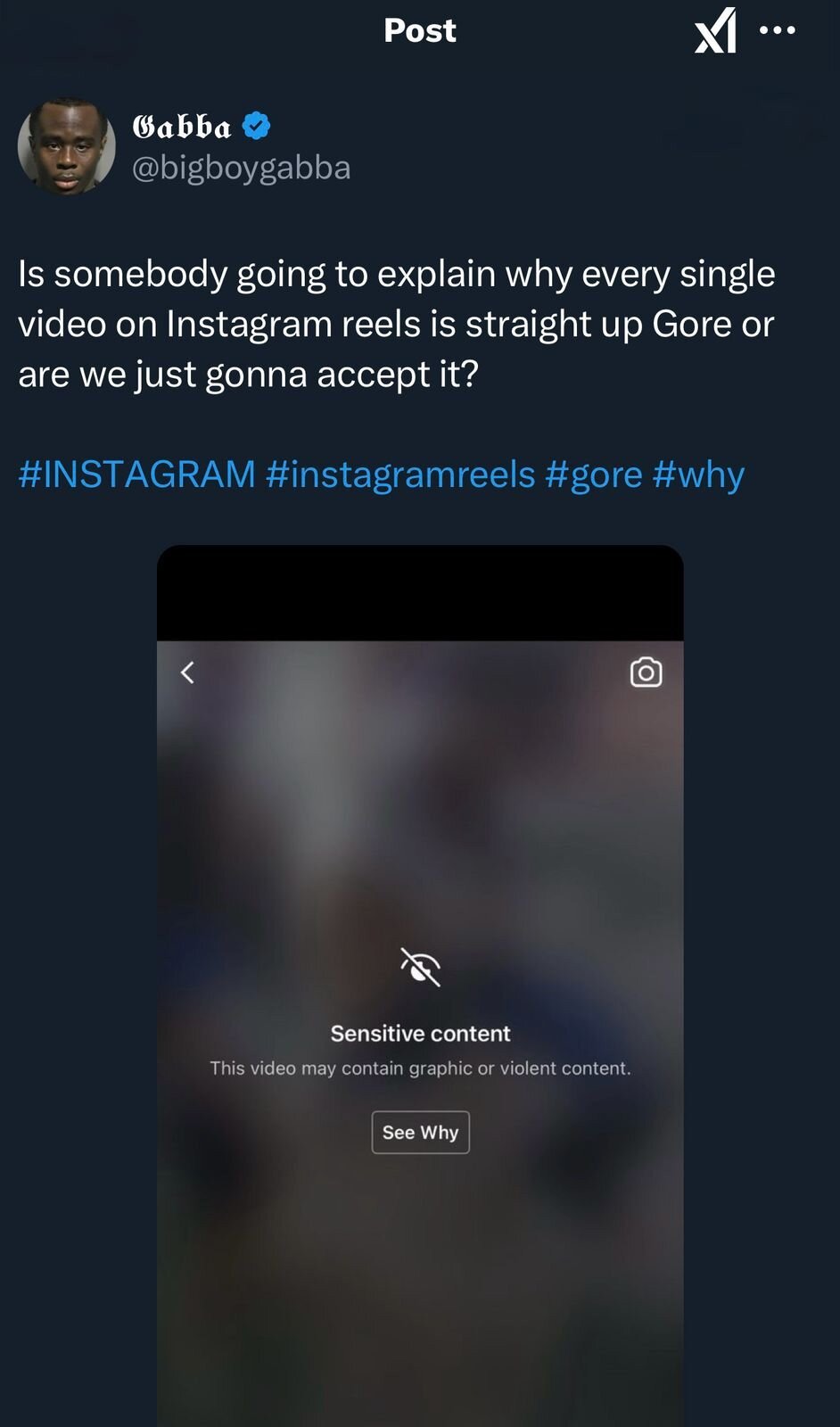

This apology came after numerous Instagram users took to social media platforms to voice concerns about the disturbing content flooding their Reels, with some even claiming they encountered such material despite having set their "Sensitive Content Control" to the highest moderation setting.

The videos in question, which included disturbing scenes of violence, killings, and cartel-related content, were marked with the "sensitive content" label.

Despite this warning, many of these clips were recommended back-to-back, creating a distressing user experience.

Under its policies, Meta typically removes content that is particularly violent or graphic and applies warning labels to other sensitive material. The company also restricts users under 18 from viewing such content.

The issue arose on Wednesday, when users around the world began reporting that graphic content was showing up in their Instagram Reels, with many questioning how this material had bypassed Meta’s content moderation systems.

Users began demanding answers. One user wrote on X (formerly Twitter), "Is somebody going to explain why every single video on Instagram reels is straight up Gore or are we just gonna accept it?"

Another user chimed in, "...I'm only getting flights, assaults, d*aths, and inappropriate content." Yikes!

According to Meta, its platform aims to protect users from violent and graphic material, and content that violates this standard, such as "dismemberment, visible innards, or charred bodies," is typically removed.

The company also adds limitations to content that may be deemed inappropriate but still serves a purpose in raising awareness about human rights issues, armed conflicts, or terrorism. These posts may appear with warning labels.

"Prohibited content includes videos 'depicting dismemberment, visible innards or charred bodies,' as well as content that contains 'sadistic remarks towards imagery depicting the suffering of humans and animals,'" said Meta’s spokesperson.

Despite this, the surge of graphic content caught many users off guard. The incident has added to Meta's long-running controversies surrounding content moderation on its platforms.

Since 2016, Meta has faced various criticisms regarding lapses in its content moderation efforts, including its role in spreading misinformation and facilitating illicit activities, like drug sales. Additionally, its failure to curb violence in regions like Myanmar, Iraq, and Ethiopia has drawn significant scrutiny.

Meta’s recent decision to shift from using third-party fact-checkers to a new "community notes" flagging model has raised questions about its commitment to addressing these issues.

The company has also adjusted its content policies, including simplifying restrictions on topics like immigration and gender that it deemed out of step with mainstream discourse.

In light of the recent error, Meta assured users that it had taken corrective measures.

"We apologize for the mistake," the company said. It also confirmed that it would continue working to improve its content moderation and better protect users from inappropriate material.
