As 2023 came to a close, Bill Gates had some predictions to share for this year. In a blog post on his personal portal, GatesNotes, the billionaire philanthropist and co-founder of Microsoft predicted that 2024 would be the year artificial intelligence would “supercharge the innovation pipeline.”

“This year gave us a glimpse of how AI will shape the future, and as 2023 comes to a close, I’m thinking more than ever about the world today’s young people will inherit,” wrote Gates. “This is an exciting and confusing time … We now have a better sense of what types of jobs AI will be able to do by itself and which ones it will serve as a copilot for … [but] if you haven’t figured out how to make the best use of AI yet, you are not alone.”

The past year did at times feel like the year of AI, when our minds were not drawn to the conflict and chaos raging elsewhere. A range of platforms and products, from ChatGPT to Midjourney, spanning AI chatbots and AI image and voice generators, saw rapid adoption and discussion. For all its novelty, the technology almost instantly felt as if it had been here all along.

However, this swift progress also stirred a slew of anxieties about the future of AI. The likes of Elon Musk and Sam Altman, the CEO of ChatGPT developer OpenAI, warned that the technology could very well spell the end of humanity.

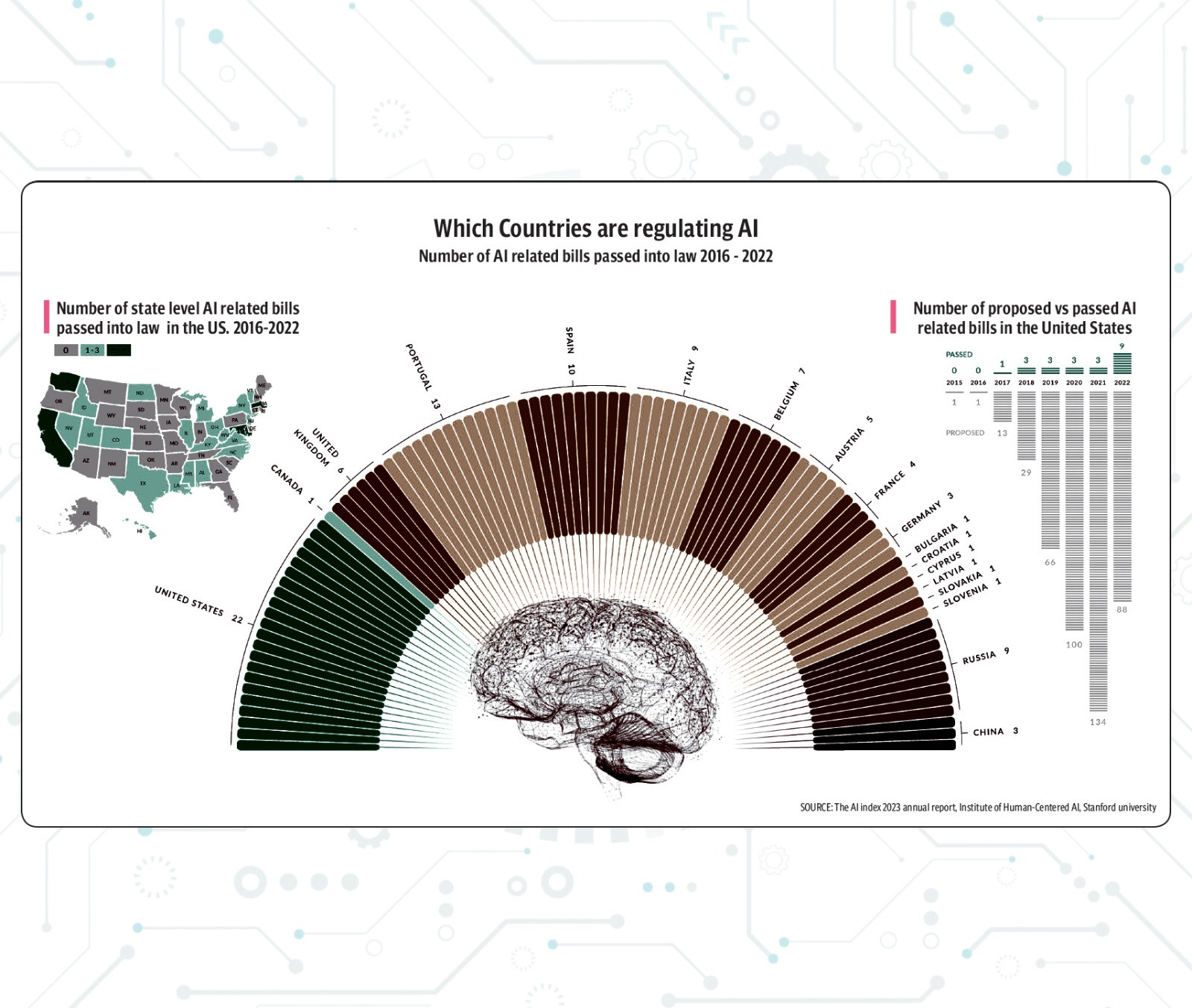

In late 2023, the United Kingdom took the initiative by hosting a highly anticipated global AI safety summit. The moot, which brought together over a hundred stakeholders from 28 countries, urged governments and developers to collaborate on regulating the technology before it spiraled out of control.

Indeed, aside from the AI doom-mongering, there were some signs of things being 'out of control' from ethical, legal, and privacy-related perspectives. For instance, just this week, the White House sounded the alarm after a series of explicit, AI-generated images of pop megastar Taylor Swift spread quickly on social media. The incident added another layer of concern to a list of challenges women continue to face in the digital realm.

Similarly, the same technology has also been used to amplify hate and stereotypes against particular races and communities at a time when radicalism is on the rise, particularly in the West. With elections set to be held in more than 60 countries this year, AI-generated misinformation poses an even bigger concern.

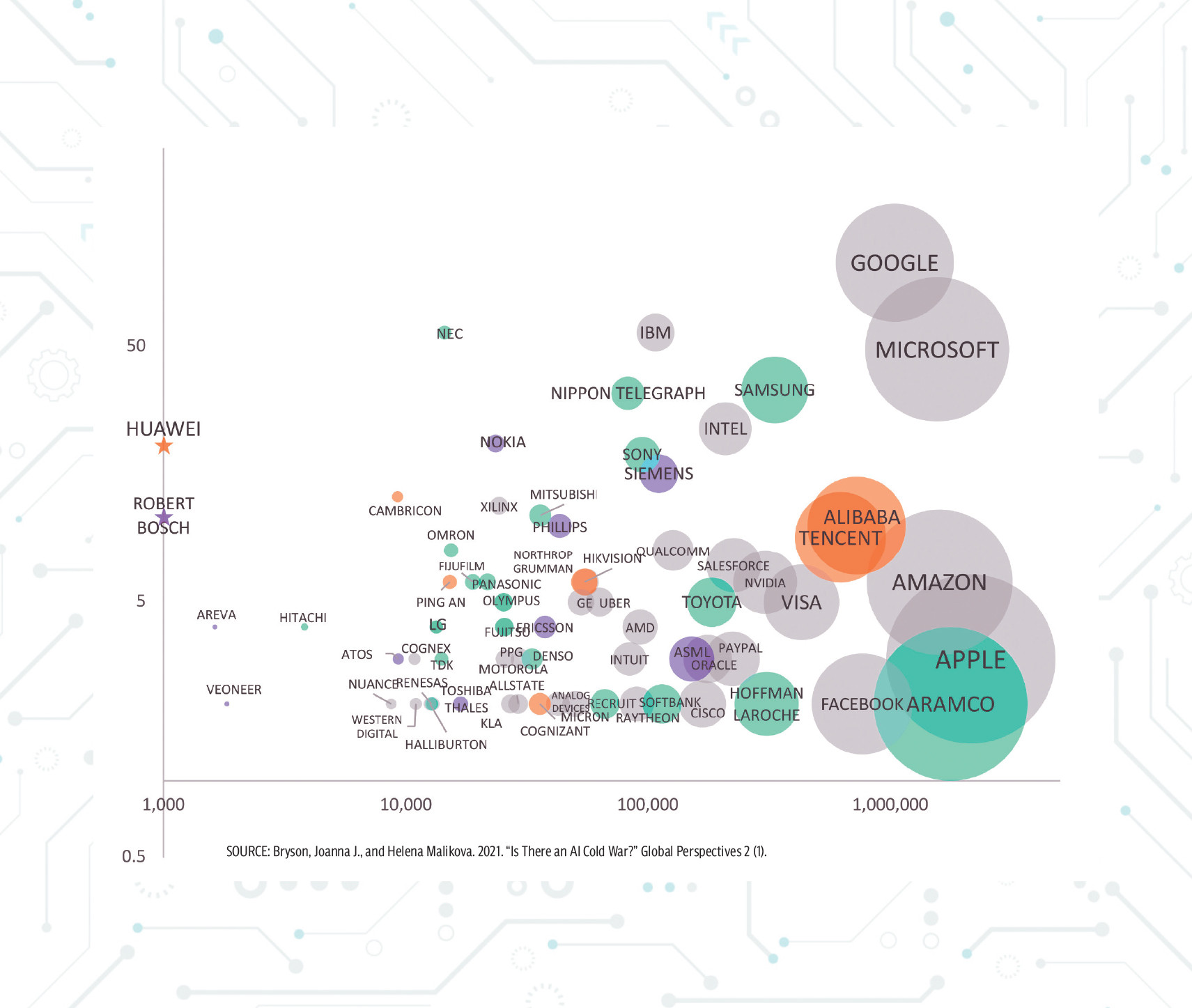

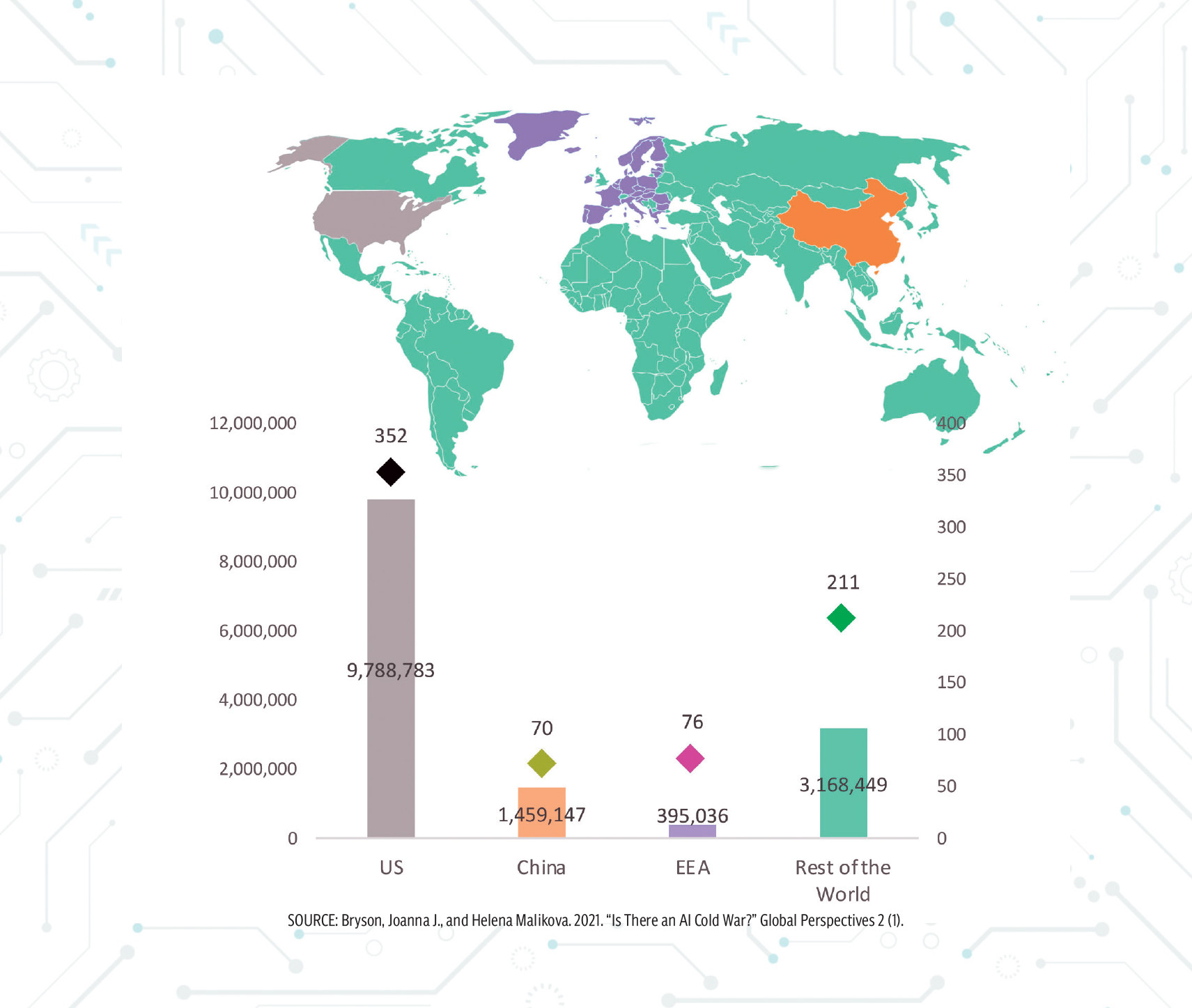

At the same time, the centre-stage role of governments in the global AI discourse also demands scrutiny. While much of the misinformation debate focuses on malicious non-state actors, in an increasingly polarised world it is harder to determine whose narrative to believe. Given the disparity in AI research among nations, could we really trust government regulation? What risks could the power concentrated in a handful of firms and developers, often dominated by a single enigmatic figure like Musk, Facebook's Mark Zuckerberg, or once Gates himself, pose to the rest of the world?

As we look ahead this year, The Express Tribune reached out to experts in the field of AI research to share their thoughts on the state of the technology and the various issues arising from it.

Unpacking the hype

The world of AI, as soon as it hit the mainstream, found its share of hype-men (and women). But remarkable as the technologies currently available are, how exactly do they work? For most lay people, AI triggers visions from science-fiction: beings and intellects that are near human, if they don’t surpass humans already. Do the tools available to us match up to that expectation, or are we letting flights of human fantasy cloud our judgement?

Speaking to The Express Tribune, Dr Asad Anwar Butt, an associate research scientist in the Department of Computer Science at Johns Hopkins University, contextualised the current AI landscape. “The current AI hype is based on the development of generative AI models, such as large language models (LLMs). These models, trained on extensive datasets, have captivated public imagination with their impressive capabilities,” he observed.

However, Dr Butt cautioned against overlooking the inherent shortcomings of these models: “All the state-of-the-art models have the same underlying technologies and it remains to be seen how far this will take us, since training data is already a big limiting factor.” According to him, while the state of AI is encouraging, there is no reason to believe that this is the path towards ‘artificial general intelligence’ (AGI), the hypothetical type of intelligent agent that could learn to accomplish any intellectual task that humans or animals can perform.

For Dr Joanna Bryson, a professor of ethics and technology at The Hertie School of Governance in Germany, many of the seemingly far-fetched expectations and anxieties about AI were unfounded, at the moment at least. Regarding the latter in particular, she drew attention to similar concerns when computers became accessible to average consumers. “In the 80s, there were people who wondered ‘is it right we turn our computers off… wouldn’t robots in the future be mad that we turned off their predecessors?’” she shared.

That said, she did voice concern about certain beliefs and expectations among some prominent figures in the AI world: “I’m really worried about the number of very senior people I’ve met who have these weird hopes that AI will scrape all knowledge somehow, as if all knowledge was already out there somewhere.” Touching on the philosophical aspect, Dr Bryson said that while AI even in its present form could sometimes stumble onto ‘a space human culture hasn’t reached yet’, it is far-fetched to assume that all current human knowledge is even available on the Internet for it to extrapolate.

Misinformation and technological education

Shedding light on the intricate relationship between AI and misinformation, Dr Jutta Weber, a media theorist and professor at the University of Paderborn, identified a critical gap in technological education. “The problem with AI now is I think a problem of technological education, because most people are not aware how easily things like photos and videos can be manipulated,” she explained.

She underscored the role of AI in amplifying existing challenges associated with digital technologies, remarking, "What these tools have basically done is perhaps not present any new sort of challenges as much as they've made these older challenges that existed with digital technologies somewhat trickier and more frequent." Anticipating a further sharp increase in the proliferation of misinformation, she stressed the need for greater awareness and for tech and media literacy.

For Dr Butt, the problem of misinformation dissemination increased manifold with the growth of social media, as it became much easier to spread unsubstantiated claims. “The use of AI has further fueled this issue since the generation of credible information has never been easier, whether it is writing in the style of someone famous, or generating fake images,” he noted.

How much should we worry?

According to Dr Butt, the existential crises being discussed were somewhat far-fetched at this point given the current state of AI. However, he felt the increase in misinformation/disinformation using some of the available AI tools was a major worry. “The AI Safety Summit and similar discussions have touched on a number of concerns regarding the development of AI. A major source of concern is state-sponsored campaigns due to the resources available to them. Other concerns include these technologies being used to discriminate against already disadvantaged groups and erosion of privacy by authoritarian governments,” he said.

A key challenge could also be present in the framing of events and discourse in a world dominated by deepfakes and AI-generated misinformation. “Everyone is very worried about the amount of fake content that's being generated … but that might be easier to solve,” said Dr Bryson. “There will be as much trouble with people claiming they didn't say things as people claiming they did. No one could trust random video … it’s like going back a century, video is not evidence anymore,” she added.

Dr Weber, meanwhile, drew attention to the dangers of viewing AI technology as infallible. “If you know a little bit about AI, you know what you need to do,” she said. “But those people who don't know, they are always like ‘wow, this is unbelievable’. And I think this gives space for this AI religion. It becomes intriguing for people to believe more and more in this powerful technology.”

State misinformation and bias

While acknowledging that state-sponsored misinformation will always be a bigger threat, Dr Butt said that challenge was distinct from the issue posed by inherent biases. “Regarding the reporting of Gaza vis-a-vis Ukraine, it is a slightly different issue, for instance… this is more related to bias,” he said in response to a question. “On a lot of issues, the west may have a different tilt than our part of the world. Companies located there, e.g., OpenAI, will ensure that the output is acceptable to their major audience. This may not be deliberate misinformation, but a world-view different from other places.”

“That's what large language models do, right? They take huge numbers and samples and then take the averages,” explained Dr Bryson. “So, it's a problem for the minority position. It generates some bias.”

“Since every governing body has some inherent bias, I am not sure if a truly unbiased system is even possible,” Dr Butt added. On the other hand, he identified deliberate misinformation, such as to interfere in the electoral process of another country, as a more serious problem. “Recent years have seen a growing number of accusations of misinformation campaigns by enemy states. The US accused Russia of meddling in its elections in 2016. In return, Russia has accused the US of misinformation regarding the war in Ukraine.”

Asked about the challenges of misinformation propagated by non-state actors versus that which might be initiated or propagated by governments using AI, Dr Butt pointed to scale as the biggest factor. “The resources of states or large political groups will be substantially more than some misguided actors. Governments, in general, will have more resources, which results in access to better systems, more prompt engineers, and better computation resources,” he said.

However, even if most non-state actors are unable to compete, that does not mean that they cannot cause substantial damage themselves, Dr Butt pointed out. He added that the discourse may also revolve around non-state actors because it is highly unlikely that a state actor will ever accept responsibility.

Talking about the motives for misinformation, Dr Butt believed they may be the same for state or non-state actors: “to cause confusion, fear, or chaos among the target population.” In any case, he noted that it is very difficult for the general public to be sure of the information being provided to them. “The more diligent may try to use multiple sources from different political spectrums, but it is not possible for a majority to do this.”

He stressed that combating misinformation requires tech companies to find ways to mark generated output and identify it as such. “Governments will need regulatory frameworks and hold accountable any person or group spreading misinformation. This will need to be an effort that involves multiple actors.”

Regulation and accountability

Both Dr Butt and Dr Weber emphasised the pivotal role of government regulation in mitigating the risks associated with AI-driven misinformation. While the former acknowledged the potential for regulatory overreach, he asserted the necessity of concerted efforts to address the challenges at hand.

“Government regulation always comes with the risk of overreach. Big corporations like Google and OpenAI are trying to partner with governments on this, and some would argue have been at the forefront of creating 'end of the world' hysteria,” said Dr Butt. “This leads to a bigger problem of monopolisation as larger corporations try to maintain their control and thwart the so-called democratisation of AI.”

“Still, some sort of government regulation will be needed to keep AI-related misinformation in check. Any such effort will need unanimous support to be effective. As of now, I am unsure as to the best way to go about this,” he shared, adding that he felt the Executive Order on AI by the US President is a good starting point on the risks posed by the technology.

Dr Weber advocated for transparency and accountability in AI development and deployment. "In the best of all worlds, you would have AIs which are openly developed, where you could access the code," she said, emphasising the importance of open access to AI algorithms and data to facilitate scrutiny and mitigate biases.

On the topic of transnational treaties to regulate AI use, Dr Butt emphasised the intricate nature of such agreements, particularly concerning the distinction between state and non-state actors. He highlighted the difficulty in attributing responsibility, as non-state actors often find themselves implicated in state-sponsored activities. "Any agreement will need to handle this within a defined scope," Dr Butt remarked, acknowledging the daunting task of reaching consensus among nations with varying interests and agendas.

For Dr Weber, the challenge lay not only in reaching consensus but also in defining the parameters of AI itself. "How do we define AI? How long would it take to find a global regulation on the UN level?" she asked, adding that she found a glimmer of hope in recent developments, such as the EU AI Act. While not without its flaws, she saw it as a step that guides the conversation towards greater transparency and accountability.