Technology, as an enabler, has always played an integral role in human development, consistently evolving and adapting to enhance our quality of life. In recent months, Artificial Intelligence has emerged as one technological breakthrough that has rapidly and profoundly impacted our lives, reshaping and revolutionising our daily routines and activities.

The swift progress AI has made has generated both excitement and apprehension due to its potential influence. It is undeniably reshaping industries and influencing how we live and work in numerous ways. While it offers numerous advantages, it also raises concerns about ethical considerations, job displacement, and the responsible use of AI. As AI continues to advance and shape the future of technology, finding a delicate balance between harnessing its potential and addressing the associated risks will be of paramount importance.

Navigating digital perils

In this evolving landscape, both experts and average Internet users have voiced deep concern over the ways AI can exacerbate the existing challenges of fake news, misinformation and harmful content. As Asad Baig, the founder of Media Matters for Democracy (MMfD), pointed out, one major fear revolves around generative content, commonly known as deepfakes. “Deepfakes have the potential to be highly dangerous, especially in an environment where digital literacy is low, and historical cultural and political biases prevail,” he said.

Baig highlighted the peril by sharing an example of a study that explored a piece of software capable of ‘automated image abuse’. This software could digitally manipulate or create explicit images of random women using AI. The challenge, he said, lies in finding effective responses to this problem. “For instance, China is one of the first countries to regulate generative content, requiring subjects' consent and the use of watermarks to indicate manipulated content. However, given that such laws can be easily used to curtail free speech, they open up a dangerous slippery slope.”

The AI algorithms used by major social media platforms can also amplify hate by corralling users into dangerous echo-chambers, the MMfD founder warned. “Take the Facebook Papers, which revealed that Facebook in India has not curtailed anti-Muslim content and views proliferating on the platform.”

According to Baig, generative software is likely to be increasingly accessible in the form of user-friendly apps as time goes on. This, in turn, could turn content moderation into a serious problem. “A cohesive policy response is needed to address this on a global scale,” he stressed.

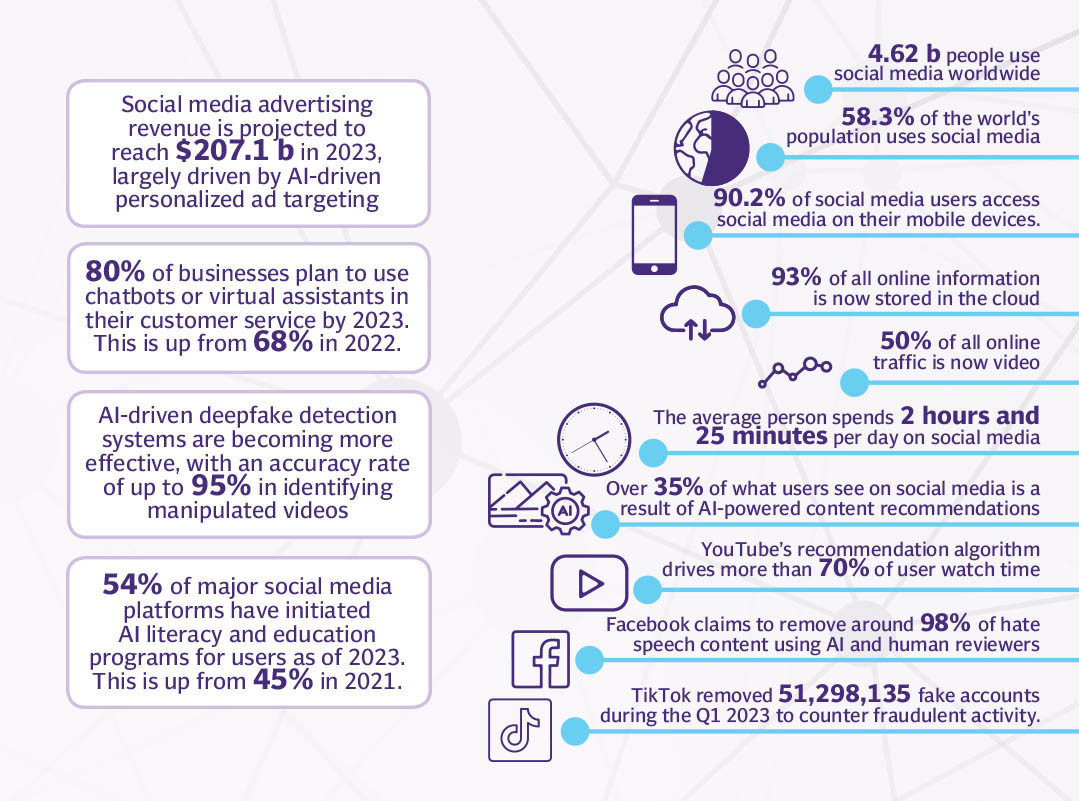

Distinguishing between misinformation and fake news involves distinct efforts aimed at preventing the misuse of technology, and many social media platforms have been actively working on regulation in these areas. “There have been efforts to utilise AI-based systems to detect generative content; essentially using AI to fact-check AI,” the MMfD founder shared. “But then again, traditional communication theories suggest misinformation tends to spread more rapidly than credible information,” he added. “I feel a comprehensive plan built around [information] inoculation techniques is needed for the masses to minimise the fallout.”

Baig added that it is also extremely important that platforms are held to account and transparency is ensured in their algorithms. “Whistleblowers and researchers have provided substantial evidence that certain social media companies' AI-based algorithms are exacerbating the spread of hate. The issue at hand is the lack of accountability in this regard. Instead of just educating the creators, there's a pressing need for platforms to take responsibility for this specific problem.”

While AI has undoubtedly simplified content creation through tools ranging from generative text to automated videos, challenges persist nonetheless. AI's impact on the performing arts and artists is one growing concern. “Bollywood star Anil Kapoor just won a case to prevent his likeness from being generated through AI. Hollywood actors have also been on strike to demand safeguards from AI after an AI-generated likeness of actress Salma Hayek was used on a show,” Baig pointed out. “While generative content holds promise, it could potentially reshape the content we consume in ways that may not necessarily be for the better,” he cautioned.

Content explosion

While AI offers enhanced speed and scalability in content creation, it also presents fresh challenges related to creativity, quality, bias and ethics. Striking the right balance between AI and human input in content development is vital to harness the advantages while mitigating the drawbacks. Ultimately, the effectiveness of AI in content creation hinges on the specific case in question, the quality of AI models and the careful management of ethical and quality issues.

“AI has been a gamechanger for many content creators through three significant tools: captions, dubbing and eye contact correction,” shared creator Syed Aun, who quickly adopted AI tools for the video content he produces. “These three tools are not only helping us save time but have also enhanced the quality of our work,” he stressed. “Take AI-generated captions – not only do we no longer spend hours transcribing our videos, automatic captions in other languages are helping creators like me reach an audience beyond those that understand Urdu or Hindi,” Aun highlighted. The time saved, meanwhile, could be put back into other production tasks like research, scripting and editing.

Highlighting the sophistication of the AI tools he uses, Aun shared how within just four to five video projects, the language model understood his speech patterns down to regional slang. “In one video, I used a word that exists in neither formal Urdu nor English. But the AI generator I used produced a caption that captured the exact meaning of what I was trying to say.”

Talking about how AI is helping expand his reach, Aun pointed to AI dubbing tools. “I don’t have to make separate videos and I don’t have to hire different translators for thousands of rupees per project. An annual subscription to an AI dubbing tool for $100 allows me to dub my video into any language I want,” he said. “The voice model will no longer sound ‘robotic’ either. The dubbing will be modeled on my voice if I want. If I want a video dubbed in Arabic, it would sound as if I was speaking Arabic.”

Some AI tools that Aun emphasised as immensely beneficial for newcomers pertain to maintaining eye contact. “When people are just starting their video-making careers, it can be challenging to deliver lines while looking at scripts or teleprompters, making the content appear unnatural and unappealing. With AI, there's the option to generate scripts through the same app and record,” he elaborated. "There are apps that turn your phone into a teleprompter while the camera continues to record normally. However, even with this, some people had issues because it looked like the subject was reading. To address this, AI steps in during video production to correct eye contact, resulting in a final video where the subject appears to maintain eye contact with the viewer instead of just reading the script."

In recent years, there has been a substantial increase in the number of people engaging in content creation. The easy availability of various AI tools has further boosted this trend. In Aun’s words, “Anyone can become a content creator now.” However, while the quantity of online content is surging, the quality hasn’t necessarily kept pace. “With or without AI tools, only two out of 10 content creators are putting out meaningful work,” he said.

Even so, AI is more a blessing in Aun’s opinion and although it necessitates adaptation, he doesn’t see it disrupting the content market too much. An example he shared pertained to how AI streamlines SEO (search engine optimisation) tracking. “Content creators no longer need to manually research trends on various social media platforms. Additionally, AI proves invaluable in generating captions and identifying trending keywords,” he said.

Inherent bias

Technology plays a vital role across various industries, driving enhancements, efficiency, and innovations. With the continuous improvement of AI technology and its integration into all facets of society, its role as an enabler expands. AI's capacity to handle and analyse data, automate tasks, and enhance decision-making has the potential to reshape numerous fields, fueling progress and innovation. Nevertheless, the increasing prevalence of AI also raises substantial ethical and regulatory concerns that demand attention. Badar Khushnood, CMO of S4 digital, emphasised, "Technology is not a destination but a tool to facilitate human growth. It has evolved over time, evident in changes like bandwidth and connectivity."

Over time, innovations have empowered machines to become highly intelligent, nearly on par with human minds, and in some aspects, even surpassing them. However, the impact of this progress is a mix of positive and negative outcomes, mainly due to the two distinct approaches used for AI: machine learning and deep learning. "Machine learning is widely used, but its main drawback is the lack of empathy and quality in favour of volume and speed," explained Khushnood. He highlighted an example to illustrate this point, stating, "For a long time, in America, credit card approvals were influenced by biases where applications from people of colour were often denied by white individuals. Even today, AI tends to exhibit biases because it learns from historical data."

Integrating deep learning and synthetic data into AI models can reduce bias and improve context. However, this process demands a gargantuan volume of data, presenting a significant challenge in maintaining data privacy. “When such extensive data is used for machines to learn behaviours or medical conditions, inadequate anonymisation can jeopardise privacy,” Khushnood cautioned.

To address such concerns, it is crucial to establish proper regulation and verification processes over time. “Recent events, like the Russia-Ukraine conflict, have shown how data can be compromised across borders. This is where human responsibility comes in. How you use technology, for good or for bad, changes the story. A vehicle can be used as an ambulance to save lives or it could be used as a weapon to take them.”

Khushnood also stressed that no matter what technological advancements or tools are introduced to assist in saving time and creating content, the vast realm of human creativity remains unparalleled. "Machines still can't compete with the human mind," the digital expert emphasised. "After reading a few articles generated by AI tools like ChatGPT, it becomes evident where creativity falls short. While using tools for assistance is understandable, dependence on them cannot truly add value to the work." Highlighting the issue of content quality, he stressed that without wisdom in content, a surplus of material becomes meaningless. Many individuals create videos solely for monetisation, lacking a genuine purpose in their content. To address these concerns, he proposed the need for fundamental government policies that can combat biases and generalised content. Without such regulations, ever-advancing technology may lead to a chaotic path and create more problems in the future.

Guardians of authenticity

As AI-generated content becomes increasingly prevalent on social media platforms, ensuring the authenticity and transparency of content has become a paramount concern. Social media giants like TikTok are taking proactive steps to address this issue. They recognise that while AI-driven content offers incredible creative possibilities, it can potentially confuse or mislead viewers if they are unaware of its origins.

To uphold content integrity, TikTok has introduced a labeling system for AI-generated content. This system enables creators to easily inform their community when they post content that has been significantly altered or modified by AI technology. This label not only empowers content creators but also assists viewers in understanding the creative processes behind the content they consume.

One of the primary motivations behind this initiative is to comply with TikTok's Community Guidelines' synthetic media policy. This policy, introduced earlier this year, requires users to label AI-generated content that contains realistic images, audio, or video. By doing so, the platform ensures that viewers can contextualise the content, which helps prevent the potential spread of misleading information.

These efforts aim to provide a clear and intuitive way for TikTok's diverse community to distinguish AI-generated content from human-generated content, helping to maintain authenticity on the platform. Its emphasis on these AI initiatives highlights the platform's commitment to fostering a safer, more transparent digital environment.

Now, as social media platforms continue to introduce AI-driven features and content, it is equally important to educate users on recognising AI-generated content. The short-video platform has acknowledged the significance of media literacy and is actively investing in initiatives to empower its community with the knowledge needed to identify AI-generated content.

In an effort to assist users in navigating this evolving digital landscape, TikTok has released educational videos and resources. These resources are designed to provide insights into the use of labels for AI-generated content, enhancing user awareness and understanding. Over time, these educational materials are expected to become valuable tools for both content creators and viewers, similar to the platform's verified account badges and branded content labels.

By fostering media literacy and providing the tools and knowledge needed to identify AI-generated content, TikTok is actively supporting its users in safely navigating the advancements of AI-generated content. This commitment reflects the platform's dedication to the well-being and creativity of its community.

However, despite the increase in AI literacy and education programs on social media platforms, 68% of users are still unaware that social media platforms use AI to curate content in their feeds. This is likely because social media platforms do not always make it clear how they use AI.

Empowering users

In Pakistan, TikTok has introduced a campaign to bolster awareness and understanding of its community guidelines. It also organised a series of on-ground workshops across the country where a team of experts on community guidelines enlightened creators about the platform's rulebook.

Health content creator Dr Daniyal Ahmed, talking about the authenticity of content on the social media platform, said, “There are all kinds of content available on social media. Creators who are not doctors are giving health tips which often end up harming their followers. In this age of AI, it is really important to keep a fact-check and follow the guidelines given by the platform.”

“It is really important for the users to follow community guidelines provided by the platform. This will not only keep everyone safe but increase the quality of the content. TikTok's workshop to educate the users about this in Pakistan is a game changer. It was a much needed step. The creators have to know what to keep in mind while making content in this digital age. I wholeheartedly support the idea of conducting more of these engagements. Together, we can foster a healthy, safe, and vibrant community where creativity thrives,” said Daniyal, who won TikTok’s Health Creator of the Year 2022 award.

According to TikTok, which removed nearly 12 million videos from Pakistan for violating Community Guidelines in Q1 2023, the guidelines on the platform serve as a comprehensive framework that outlines the rules and norms governing platform usage. They are designed to stay adaptable in the face of evolving online trends and potential hazards, enabling the platform to effectively address the risks associated with changing user behaviors. These guidelines are continuously updated and refreshed in consultation with over 100 organisations worldwide and members of the TikTok community.

TikTok also collaborated with some of Pakistan's most popular content creators to spread awareness about its Community Guidelines. Under the hashtag #SaferTogether, the campaign provides a landing page on the platform’s application accessible to the Pakistani community, where users can view videos created by their favorite content creators. These videos emphasize the importance of comprehending these guidelines and how doing so can improve the quality of the content they produce.

To enforce these guidelines, social media platforms rely on a combination of AI and human moderators. The AI systems are responsible for identifying and removing any videos that violate the community guidelines. Following AI screening, human moderators step in to double-check and catch any content that may have been missed.

In the case of TikTok, the platform has expanded its human moderation team in Pakistan by a significant 300 per cent to ensure stringent content oversight. This enlarged team is dedicated to the meticulous review of all content, including AI-generated videos, to ascertain that nothing that breaches the guidelines finds a place on the platform. Whether a video is deemed misleading or potentially harmful, it is promptly removed to maintain the platform's standards and the safety of its users.

The rise of AI has undoubtedly transformed various aspects of our lives, from content creation to the authenticity of information on social media platforms. While AI offers incredible opportunities for efficiency and creativity, it also presents significant challenges, including ethical concerns and the potential for misuse. As AI continues to evolve and integrate into society, it is essential for both individuals and platforms to adapt, educate, and establish responsible guidelines. The commitment of platforms like TikTok to foster media literacy and enforce community guidelines is a positive step toward creating a more informed and secure digital environment. In this ever-changing landscape, the responsible use of AI remains pivotal in striking a balance between its advantages and potential hazards.