It has become a recurring pattern: once advances in artificial intelligence within a particular discipline reach a certain point, public discourse quickly shifts to the societal complexities and questions the technology may raise. Part of this is a fascination with anthropomorphising AI systems, which at times obscures, for better or for worse, the human actors involved. Such a practice treats AI less as a tool and more as an entity of its own, capable of making moral judgments independently.

In his paper The Rhetoric and Reality of Anthropomorphism, David Watson, a researcher and lecturer in AI at King’s College London, argues that the practice of ‘humanising algorithms’ not only misconstrues the mechanisms behind AI systems but can also have vast ethical implications. Watson believes it could absolve the people behind an AI system of responsibility for any morally questionable actions it performs. In recent memory, self-driving cars became a point of debate when it came to attributing responsibility for pedestrian casualties. If the AI system alone is to blame, in the sense that it acted as its ‘own self’, then where does accountability lie?

When it comes to creative expression generated through neural network algorithms, the conversation has likewise moved on from the ‘awe’ of a machine supposedly creating ideas to the ethical, and in some cases legal, challenges the practice presents. Visual art produced through AI-based software and platforms such as Stable Diffusion, DALL-E and Midjourney has, of late, become embroiled in a controversy over attribution.

Getty Images, along with a host of other platforms, has banned the upload and sale of visual content generated through AI. In an interview with The Verge, Getty Images CEO Craig Peters talked about how the company is preemptively trying to tackle copyright issues that could arise. “There are real concerns with respect to the copyright of outputs from these models and unaddressed rights issues with respect to the imagery, the image metadata and those individuals contained within the imagery,” said Peters. “We are being proactive to the benefit of our customers,” he added.

The idea of contested ownership rests on the fact that AI image synthesis platforms do not generate art from a vacuum but derive it from existing images within a particular context. At the same time, the end product can still be deemed original, since it can justifiably be argued that the extensive (almost infinite) set of images used for synthesis plays the part of a colour palette of sorts. Nonetheless, before one enters into philosophical arguments about AI art, it is imperative to first understand the technical nuances of how these generators work.

How do AI art generators work?

The large-scale digitisation of artwork over the past few decades has essentially laid the groundwork for AI art generators. These extensive collections enabled the development of deep learning methods for the computational analysis of images, breaking a piece of visual art down into its different aspects. Advances in deep neural networks, particularly convolutional neural networks (CNNs), have allowed for the automated recognition of different aspects of a painting, such as objects, faces or other specific motifs. The primary application of CNNs is to classify images by breaking down the different features that make up an image.
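To give a rough sense of what ‘breaking down’ an image means, the sketch below shows the basic operation inside a CNN layer: a small filter is slid across the image and produces a map of where a particular feature appears. It is an illustration only. The toy image, the hand-written edge filter and the function name `convolve2d` are assumptions made for this example; in a real CNN the filter values are learned from training data rather than written by hand.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    As in most deep learning libraries, the kernel is not flipped,
    so strictly speaking this is a cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map


# Toy 4x4 greyscale "image": dark left half (0), bright right half (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-written filter that responds to dark-to-bright vertical edges.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, kernel)
for row in feature_map:
    print(row)
```

The feature map is non-zero only in the middle column, where the dark half meets the bright half. A CNN stacks many such learned filters, which is how it comes to pick out edges first and then more complex motifs such as faces or objects.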

When it comes to the use of algorithms alone in modifying digital art, texture synthesis over existing images and automated style modifications have been possible for decades. However, the creation of new images through algorithms has witnessed great advancement only in the last five years. The use of Generative Adversarial Networks (GANs) has been the biggest contributor to the AI art movement. Here, two competing networks act as a generator and a discriminator. The generator learns from a sample of real images and produces new images, while the discriminator works to classify images as ‘real’ or ‘fake’, the fakes being the generator’s variations on that sample.
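The competition between the two networks can be expressed as a pair of loss values, sketched below. This is a minimal illustration assuming the commonly used binary cross-entropy formulation, not the code behind any particular art generator. The function names and the example scores are hypothetical; each score stands for the discriminator’s estimated probability that an image is real.

```python
import math


def bce(prediction, target):
    """Binary cross-entropy for one prediction in (0, 1) against a 0/1 target."""
    eps = 1e-12  # keeps log() away from zero
    p = min(max(prediction, eps), 1 - eps)
    return -(target * math.log(p) + (1 - target) * math.log(1 - p))


def discriminator_loss(scores_on_real, scores_on_fake):
    """Low when real images score near 1 and generated images score near 0."""
    real_term = sum(bce(p, 1.0) for p in scores_on_real) / len(scores_on_real)
    fake_term = sum(bce(p, 0.0) for p in scores_on_fake) / len(scores_on_fake)
    return real_term + fake_term


def generator_loss(scores_on_fake):
    """Low when the discriminator is fooled into scoring generated images near 1."""
    return sum(bce(p, 1.0) for p in scores_on_fake) / len(scores_on_fake)


# Hypothetical discriminator outputs early in training:
# it easily tells real images from generated ones.
scores_on_real = [0.9, 0.8]
scores_on_fake = [0.2, 0.1]

print("discriminator loss:", discriminator_loss(scores_on_real, scores_on_fake))
print("generator loss:", generator_loss(scores_on_fake))
```

During training the two networks are updated in turn, each trying to lower its own loss. As the generator improves, the discriminator’s scores on generated images rise, which lowers the generator’s loss and raises the discriminator’s.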

Contemporary AI generators such as DALL-E, Stable Diffusion and the lesser-known NightCafe only require the user to enter a text-based description, perhaps select a style if the software permits, and generate images accordingly. These platforms use a transformer-based architecture with models trained to recognise, and subsequently make use of, an extensive number of image-text pairs. A prominent recent example is the Contrastive Language-Image Pre-training (CLIP) model, which was trained on 400 million image-text pairs.

The model can extract image and text embeddings in a joint feature space and measure the similarity between them. The above-mentioned platforms use CLIP in combination with other modified models. Meanwhile the user, or the ‘artist’, types a description and an image is generated automatically. Depending on how specific the user’s description is and what level of style options the platform allows, the end image may come closer to what the user imagined. For the most part, however, the resulting image will still have a random element to it.
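The similarity measurement described above is usually a cosine similarity between embedding vectors. The sketch below illustrates the idea with made-up three-dimensional vectors; the numbers and captions are purely hypothetical, and real CLIP embeddings have hundreds of dimensions and are produced by the trained image and text encoders.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: close to 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up embedding of one image in the joint feature space.
image_embedding = [0.9, 0.1, 0.3]

# Made-up embeddings of two candidate captions in the same space.
text_embeddings = {
    "a cat in outer space": [0.8, 0.2, 0.4],
    "a bowl of fruit": [0.1, 0.9, 0.2],
}

scores = {
    caption: cosine_similarity(image_embedding, vector)
    for caption, vector in text_embeddings.items()
}
best_caption = max(scores, key=scores.get)

for caption, score in scores.items():
    print(f"{caption}: {score:.3f}")
print("closest caption:", best_caption)
```

In a text-to-image pipeline, a score of this kind can be used to judge how well a candidate image matches the user’s description, so that generation is steered towards images that sit closer to the text in the shared space.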

AI art generation in action

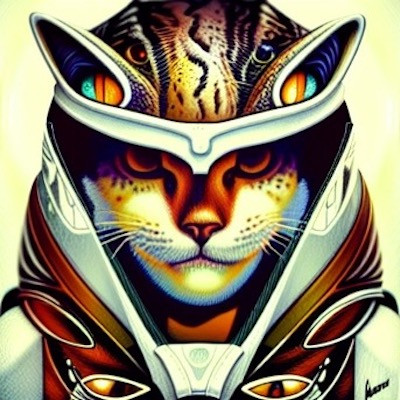

To demonstrate how AI applications create art, the following two images were created using a basic text-to-image AI generator called NightCafe. The term “Intergalactic Cat” was entered and the portrait style option was selected.

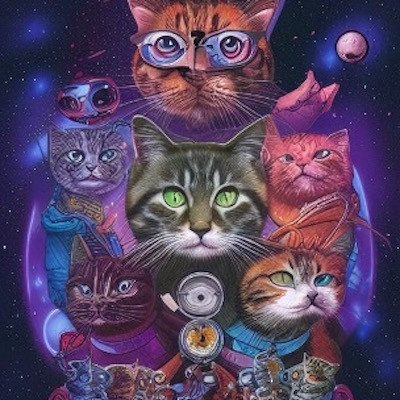

With the same image kept, the style was changed from portrait to ‘Modern Comics’ and the generator produced the following image.

For the third image, the program was reset, the same text was entered and the second style option was selected. The following image came about.

As one can see, the above images still follow a text-to-image association in an objective sense.

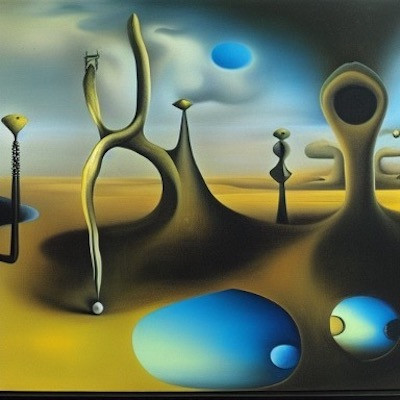

But what happens if you enter a phrase that is open to subjective interpretation from all ends? Typing the phrases “Buttercup Spice” and “Dystopian Faction”, and selecting the styles ‘Cosmic’ and ‘Salvador Dali’, produced the following images.

Credits and responsibility

Back in 2018, an artwork titled Portrait of Edmond de Belamy, created using AI tools by a Paris-based collective called Obvious, sold for roughly half a million dollars at Christie’s auction house. Whether to garner hype or because the stakeholders actually believed it, the piece was touted as being solely AI-generated. The whole campaign around Belamy essentially employed anthropomorphic language, with the motto being “creativity isn’t only for humans.” While it could certainly be argued that this campaign was responsible for the high price Belamy fetched, it can equally be argued that the piece is what it is because of the human actors involved. Even if you discount Obvious, this piece wouldn’t have been possible if it weren’t for Ian Goodfellow, the creator of the GAN. Then again, crediting Goodfellow as an artist for a single piece would be equivalent to crediting the inventor of the camera for every photograph ever taken.

But perhaps the most important piece of the puzzle is Robbie Barrat, whose code, available on GitHub, was used for the most part to create Belamy. Was the AI really autonomous in this endeavour? Would it be wrong to say that, at the very least, Obvious, Barrat and, for argument’s sake, the ‘AI’ could hold equal credit?

As expected, the proceeds all went to Obvious (no pun intended), with Barrat receiving little acknowledgement. Despite extensive conversation on authorship, for now credit lies, for the most part and from a legal standpoint, with the person producing the work, no matter how minimal the effort. A case in point is the AI artwork that was awarded first prize at a recent art competition at the Colorado State Fair. Jason Allen, who went on to receive massive backlash on social media, has retained the cash prize, with the judges maintaining that they would have made the same decision even had they known beforehand that the art was made using AI.

An area of major concern that has led the likes of Getty Images to make preemptive efforts is the use of copyrighted images to train AI networks. Shlomit Yanisky-Ravid PhD, an intellectual property law expert and professor at Yale and Fordham University, in her extensive analysis of the subject, Copyrightability of Artworks Produced by Creative Robots and Originality: The Formality-Objective Model, has argued that any effort to address the copyright challenges arising from AI-based artworks would require re-evaluating the context and meaning of what constitutes ‘original’.

There has already been one instance in which an AI artist received US copyright recognition for their work. According to a story published on Ars Technica, Kris Kashtanova created the artwork for their graphic novel Zarya of the Dawn using Midjourney, an AI art generator. The main character in the novel bears an uncanny resemblance to actor Zendaya. This is perhaps due to AI artists often using celebrity names in their text prompts.

It’s all in perception

In 2020, MIT researchers Ziv Epstein and Sydney Levine conducted a study that tried to understand how viewers perceive AI art with respect to attribution and how this perception can be manipulated. In their paper ‘Who Gets Credit for AI-Generated Art?’, they found that anthropomorphicity can significantly influence the responsibility attributed to human stakeholders in an AI art endeavour. Increased anthropomorphicity ends up decreasing the responsibility attributed to the artist and increasing that attributed to the engineer. According to them, the issue of perceived credit is greatly dependent on the lexicon used to describe the technology.

Discussing the Belamy case, the participants in their study were of the opinion that Barrat should have been given due credit.

Aside from attribution, AI-made art may still be the recipient of negative sentiment when compared with human-made art. In the Colorado State Fair incident, as soon as it became public knowledge that Allen had won with AI-produced art, he faced backlash from the public, with the aesthetic merits of the art itself left out of the discourse. Furry-focused art platform FurAffinity says it banned AI artwork on its platform because it was somewhat unfair to ‘human artists’. “AI and machine learning applications (DALL-E, Craiyon) sample other artists’ work to create content. That content generated can reference hundreds, even thousands of pieces of work from other artists to create derivative images,” said FurAffinity’s mods in an interview with The Verge. “Our goal is to support artists and their content. We don’t believe it’s in our community’s best interests to allow AI generated content on the site.”

More generally, anthropomorphising AI in the creative space could also end up fuelling bias against AI-generated art. Undermining the human element in creative expression could cause AI art to be perceived as ‘superficial’ and ‘hollow’ even if an objective analysis suggests otherwise.

Ather Ahmed is a freelance writer. All information and facts provided are the sole responsibility of the writer.