The Internet was once a fairly simple tool for communication between people in different places. But over the years it has evolved into something much bigger: a commercial hub, a global chat room, and an oft-critiqued, never-ending encyclopedia of information and misinformation. People around the world use the Internet for just about everything, including major purchases. But the ease of making these transactions online is offset by the growing risk of personal information being leaked or misused.

In the early days of the Internet, sharing information candidly online didn’t seem like a big deal. Since then, open access has made many users more aware of why having their personal information floating around could be dangerous. At the same time, more people are online than ever before, sending multiple tweets and TikTok videos out into the world every day and widening their Internet footprint with each Instagram photo they post. As they do all this, their data is collected and spread globally within seconds.

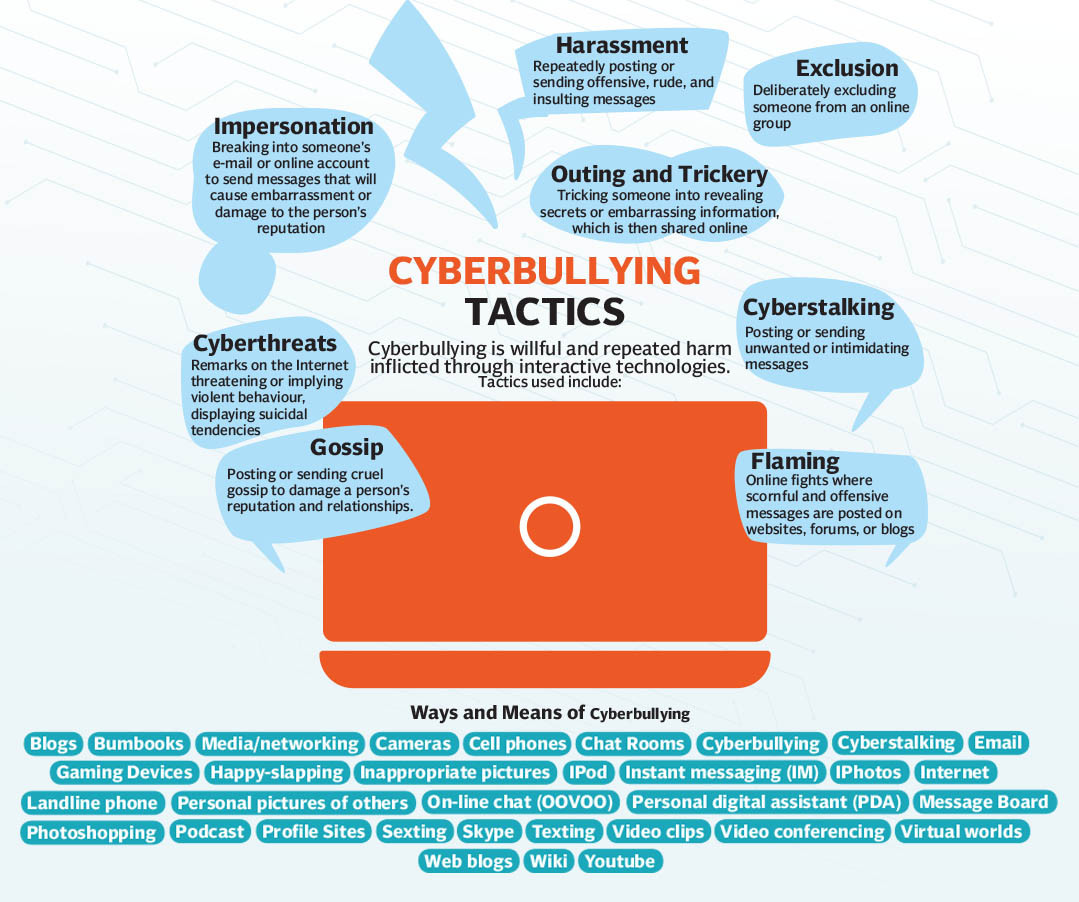

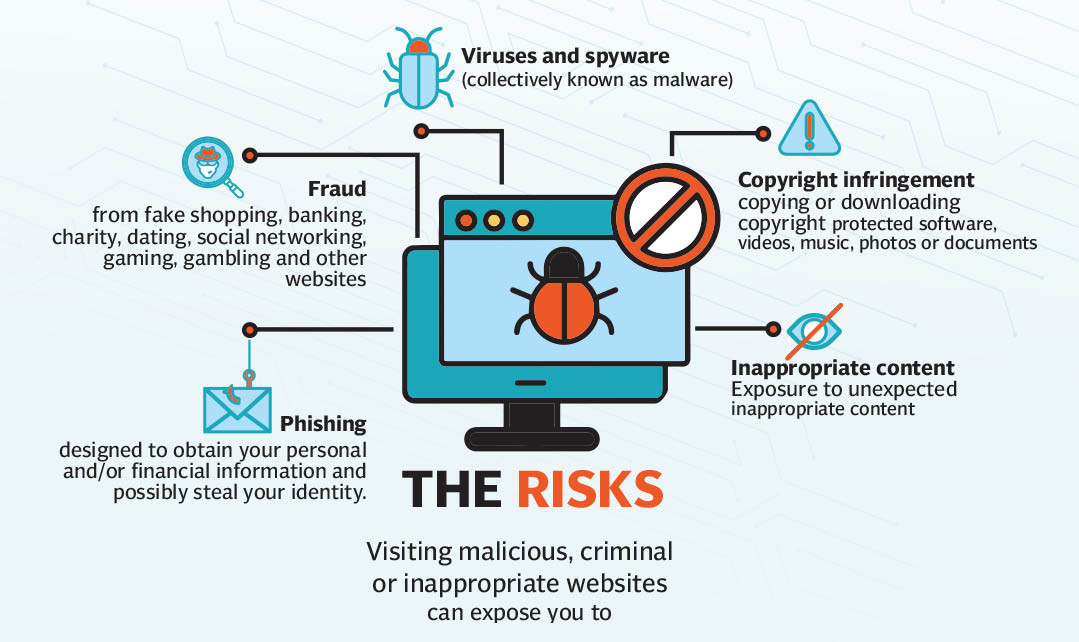

Online threats, which were once confined to malware, spam, and phishing, have grown exponentially as the Internet has become more ubiquitous. Now, cyber-attacks, cyberstalking, and cyber harassment are all things Internet users must be prepared to face. Misinformation also represents a major threat to Internet users, who can fall prey to conspiracies, catfishing, and more.

Over the years, Pakistan has caught up with much of the world with its Internet usage. Now, almost every person in the country has access to the Internet. The threats internet users in Pakistan face are no different than the threats people face in other parts of the world, especially when it comes to cybersecurity. According to official figures, one million cyber-attacks have been launched in Pakistan since January 2021.

With the increased risks netizens face, major tech companies like Google, Facebook, and Twitter have been working to increase security for their users. TikTok, which has become a social media giant in record time, is also making efforts to make the internet a safer place and educate users about digital safety and responsibility.

In a shared attempt to address the modern-day challenge of Internet safety, tech and social media companies have come together to celebrate Safer Internet Day. The 19th edition of this day, with the theme ‘Together for a better internet,’ will take place this year on February 8.

Safer Internet Day started as an initiative of the EU SafeBorders project in 2004 and was taken up by Insafe, a European network of awareness centers promoting safer and better use of the internet, as one of its earliest undertakings in 2005. Since then, Safer Internet Day has grown beyond its traditional geographic zone and is now celebrated in approximately 200 countries and territories worldwide. The day calls upon all stakeholders to join together to make the internet a safer and better place for all – especially young people. From cyberbullying to misuse of digital identity, each year Safer Internet Day aims to raise awareness about emerging issues online.

TikTok, which has become wildly successful in Pakistan, has established Community Guidelines to try to curtail inappropriate content. Videos that do not comply with the guidelines are either removed automatically through machine learning capabilities, or reviewed and then removed by TikTok’s content moderation team. This team includes moderators with local-language capabilities who not only understand multiple Pakistani languages but can also discern cultural nuances.

The Community Guidelines include Youth Safety, which helps maintain a safe and supportive environment for children and teens. TikTok also joined the Technology Coalition – an organisation that works to protect children from online sexual exploitation and abuse – to deepen the company's evidence-based approach to intervention.

“We work every day to learn, adapt, and strengthen our policies and practices. We believe we perform best when we work together with industry peers and our users to further these efforts. In the second quarter of this year, we also revamped TikTok's Safety Center to offer more comprehensive guidelines to our users for their safety. We recognise the challenge of parenting in the digital age, and as we work to support families on our platform, we introduced the Guardian's Guide, a one-stop-shop to learn all about TikTok. This includes how to get started on the platform, our safety and privacy tools like our Family Pairing features, and additional resources to address common online safety questions,” said Jiagen Eep, Head of Market Integrity and Enablement, Trust & Safety APAC at TikTok.

TikTok has also added new controls to contain inappropriate comments. Multiple comments can be deleted or reported at once, and accounts that post bullying or other negative comments can be blocked in bulk, up to 100 at a time.

The company published a Bullying Prevention guide to help families learn how to identify bullying, as well as tools to counter bullying and provide help to bullying victims or bystanders. It also launched #CreateKindness, a global campaign and a creative video series aimed at raising awareness about online bullying – encouraging people to choose to be kind toward one another. This all comes together on the TikTok user interface for Pakistani users through the #AapSafeTohAppSafe campaign.

The company's statistics paint a telling picture of the extent of the internet safety problem. In the second quarter of 2021, TikTok said it removed 81,518,334 videos globally for violating its Community Guidelines or terms of service. That is less than 1% of all videos uploaded to TikTok. Of those videos, 93.0% were removed within 24 hours of being posted, 94.1% before a user reported them, and 87.5% before the video had received any views.

TikTok also rolled out technology that automatically detects and removes some categories of violative content. As a result, 16,957,950 videos were removed automatically. Pakistan had the second highest number of videos removed worldwide (9,851,404).
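To put these figures in proportion, the short calculation below expresses the automatic removals and Pakistan's removals as shares of the global total. It is only an illustrative sketch: it assumes both figures are subsets of the 81,518,334 videos removed globally in the same quarter, and the variable and function names are made up for this example.

```python
# Figures quoted in the article for the second quarter of 2021.
TOTAL_REMOVED = 81_518_334      # videos removed globally
AUTO_REMOVED = 16_957_950       # videos removed automatically
PAKISTAN_REMOVED = 9_851_404    # videos removed in Pakistan


def share(part: int, whole: int) -> float:
    """Return part as a percentage of whole, rounded to one decimal place."""
    return round(100 * part / whole, 1)


# Assumption: both figures are counted within the global total.
auto_share = share(AUTO_REMOVED, TOTAL_REMOVED)
pakistan_share = share(PAKISTAN_REMOVED, TOTAL_REMOVED)

print(f"Removed automatically: {auto_share}% of all removals")   # 20.8%
print(f"Removed in Pakistan:   {pakistan_share}% of all removals")  # 12.1%
```

Under that assumption, roughly one in five removals was fully automated, and Pakistan accounted for about one in eight of the videos removed worldwide.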

Of the videos that were removed, 14 per cent contained nudity and sexual activities, 6.8 per cent violated harassment and bullying policies, 2.2 per cent violated hateful behavior rules, 20.9 per cent violated illegal activities and regulated goods policies, 0.8 per cent violated integrity and authenticity guidelines, 41.3 per cent violated minor safety policies, 5.3 per cent involved suicide, self-harm, and dangerous acts, and 7.7 per cent and 1.1 per cent violated violent and graphic content policies and violent extremism policies respectively.

Globally, 4,871,412 accounts were removed for violating TikTok guidelines. Separately, 11,205,597 accounts were removed for potentially belonging to a person under the age of 13, which is less than 1 per cent of all accounts on TikTok.

From April to June, TikTok stopped 148,759,987 fake accounts from being created and also removed 8,542,037 videos posted by spam accounts. Additionally, the company identified 632,416,873 attempted follow requests by fake accounts and removed 71,935,583 fake accounts.

TikTok has also developed strict policies to protect users from fake, fraudulent, or misleading advertising. In the second quarter of 2021, 1,829,219 ads were rejected for violating advertising policies and guidelines.

TikTok is also releasing its transparency report for the third quarter in the week of Safer Internet Day, showing how safe users are on the platform.

Dangerous Challenges

With the growing number of young users on every platform, the risk of young people engaging with potentially harmful challenges and hoaxes has also increased. TikTok is a platform where users perform all kinds of fun challenges. To ensure that they are not exposed to dangerous ones, it checks videos and either removes them or marks them with a warning.

TikTok has also issued guidance for its younger community so that, whenever they see a challenge, they can decide whether or not to take part. The guidance asks users to stop, think, decide, and then act: stop and think about whether the challenge is safe and authentic; if unsure, check with an adult, a friend, or an authoritative source online; and if it seems risky, do not take part, because no challenge is worth putting lives at risk. TikTok says it does not promote dangerous challenges, and users can report a video if they think it should not be on the platform.

According to a TikTok report on the impact of potentially harmful challenges and hoaxes, when teens were asked to describe a recent online challenge they had seen, 48 per cent described it as safe, categorizing it as fun or light-hearted; 32 per cent said it included some risk but was still safe; 14 per cent described it as risky and dangerous; and 3 per cent described it as very dangerous. Just 0.3 per cent of teens said they had taken part in a challenge they categorized as really dangerous.

The report also found that teens use a range of methods to understand the risks that may be involved in online challenges before they participate, such as watching videos of others taking part in challenges, reading comments, and speaking to friends. Forty-six per cent said they want “good information on risks more widely” and "information on what is too.

Some challenges are hoaxes. A hoax is a lie intentionally planted to trick people into believing something that isn't true. The purpose of a malicious hoax is to spread fear and panic. The report indicated that 31 per cent of teens have felt a negative impact of internet hoaxes and, of those, 63 per cent said the negative impact was on their mental health. Around 56 per cent of parents said they wouldn't mention a hoax unless a teen had mentioned it first, and 37 per cent of parents felt hoaxes are challenging to talk about without prompting interest in them.

“We want our users to consider the risks associated with challenges and help them understand that if they're not sure about something, they can ask a guardian or caregiver. If they see hoaxes, we'd like them to feel confident to dismiss them and not let it impact their mental well-being,” said Jiagen Eep.

“We take our responsibility seriously to support and protect teens – giving them tools to stay in control, mitigating risks they might face, and building age-appropriate experiences – so they can safely make the most of what TikTok has to offer them. We believe it's important to listen to feedback, reflect, and explore how we can improve,” he added.

He further said that they don't want any of their community members to be exposed to content about challenges that may be harmful or dangerous. “Our safety team works to remove content and redirect hashtags and search results to our Community Guidelines to discourage such behavior. It's important to acknowledge that not only are the majority of challenges fun, but they can also be an important part of a teen's developmental journey. Platforms like TikTok should do everything to detect and remove harmful content,” he said.

“We know there's no room for complacency when it comes to keeping TikTok safe for our community. These are just some of the actions we're taking now based on our research findings, and we look forward to sharing more in the future,” he concluded.

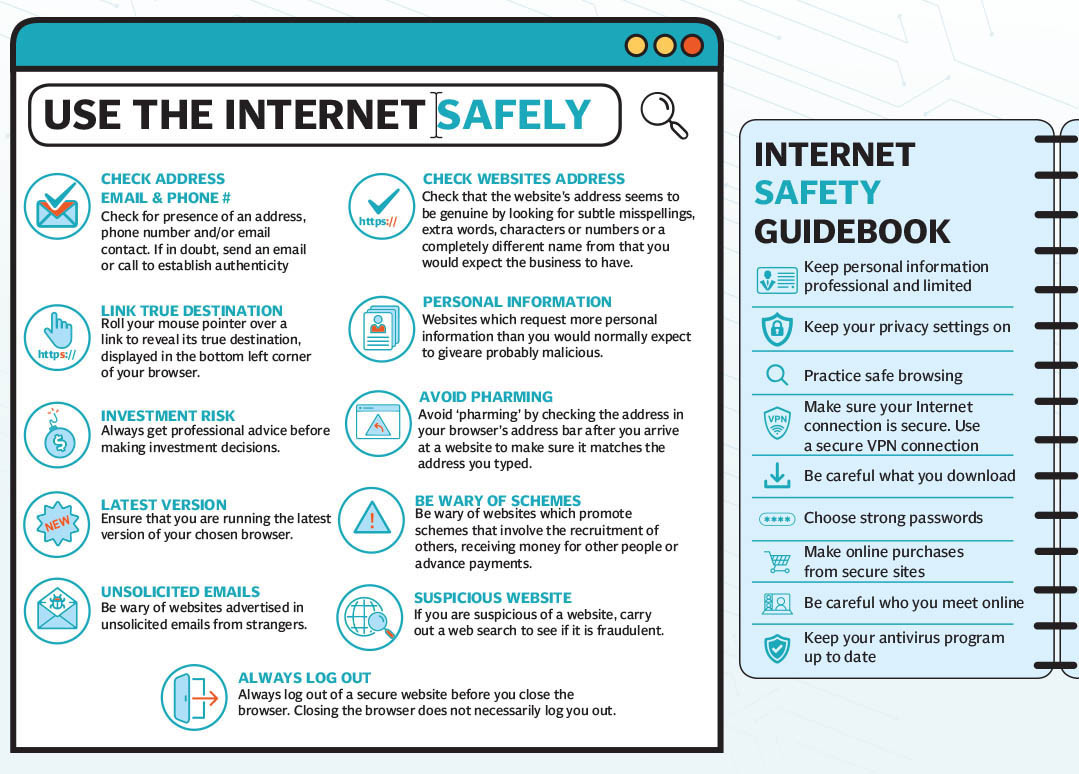

Google's services are used around the world. The company saves passwords for different websites and stores personal data, including photos and videos, on its platforms. This requires strict security and safety checks to make sure the data remains safe.

A password is the first line of defence in keeping online accounts safe from hackers. People use so many platforms that it becomes difficult to memorize every password. Experts suggest using a different password for each platform, but in practice many users either reuse passwords or choose simple ones, such as a birthday or someone's name. Such practices often lead to breaches that result in the loss of personal information and private photos and videos.

To counter this issue, Google built Google Password Manager, which checks a whopping one billion passwords every day to make sure that accounts have not been compromised.

Google Password Manager is a built-in feature of Chrome, Android, and the Google app, and keeps passwords protected using state-of-the-art technology. It allows users to set strong and unique passwords without having to remember or repeat them.
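What a "strong and unique" password can look like in practice is sketched below. This is not how Google Password Manager works internally, which the article does not describe; it is a minimal illustration using Python's standard `secrets` module, and the function name, the default length of 16, and the character rules are assumptions chosen for the example.

```python
import secrets
import string

# Letters, digits, and punctuation form the pool of allowed characters.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 16) -> str:
    """Return a random password containing a lowercase letter,
    an uppercase letter, a digit, and a symbol."""
    if length < 4:
        raise ValueError("length must be at least 4")
    while True:
        # secrets.choice draws from a cryptographically secure source.
        candidate = "".join(secrets.choice(ALPHABET) for _ in range(length))
        if (any(c.islower() for c in candidate)
                and any(c.isupper() for c in candidate)
                and any(c.isdigit() for c in candidate)
                and any(c in string.punctuation for c in candidate)):
            return candidate


# One fresh password per site, so a breach on one does not expose the others.
for site in ("shop.example", "mail.example"):
    print(site, generate_password())
```

Because each call draws fresh random characters, every site gets its own password, which is the habit a password manager is meant to make painless.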

To make entering passwords even smoother, Google lets iOS and Android users autofill saved passwords, not just in Google's own products but in other apps too.

Google has also placed great emphasis on two-step verification over the years. According to the platform, the chances of users getting hacked decrease significantly when they have two-step verification turned on. The platform has announced that by the end of 2021 it had enrolled around 150 million Google users in two-step verification, and that it would require two million content creators on YouTube to turn it on.

The platform also claims that more than two billion devices have the Google Smart Lock app built in. It has also announced the launch of the new Google Identity Services, a feature that uses tokens instead of passwords to give users access to their accounts on certain apps and websites.

Some applications have started using the Google Authenticator app, which generates a new one-time code every 30 seconds.
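Authenticator apps of this kind are based on the time-based one-time password (TOTP) scheme standardised in RFC 6238, which builds on the counter-based HOTP scheme of RFC 4226. The sketch below shows the idea under stated assumptions: the RFC defaults of HMAC-SHA1, a 30-second time step, and six digits. The function names and the sample secret are illustrative, and a real app would also handle secret storage and clock drift.

```python
import hashlib
import hmac
import struct
import time


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """Counter-based one-time password (RFC 4226)."""
    # Sign the 8-byte big-endian counter with the shared secret.
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte select an offset.
    offset = digest[-1] & 0x0F
    value = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


def totp(secret: bytes, at_time=None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over a time counter."""
    now = time.time() if at_time is None else at_time
    # Every timestamp inside the same step-second window maps to one counter.
    return hotp(secret, int(now // step), digits)


# The RFC test secret; second 59 falls in counter window 1.
print(totp(b"12345678901234567890", at_time=59))  # 287082
```

The server and the app share the secret and compute the same code independently, so a stolen password alone is not enough to sign in.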

Google also offers users some tips for keeping their accounts safe.

Slow it down: Scammers often create a sense of urgency so that they can bypass your better instincts. Take your time and ask questions to avoid being rushed into a bad situation.

Spot check: Do your research to double-check the details you’re getting. If you get an unexpected phone call, hang up. If you get an email, instead of clicking on the link sent to you, go directly to your bank, telco or government agency's website and log in from there.

Stop! Don't send: No reputable person or agency will ever demand payment on the spot. Often, scammers will insist that you pay them through gift cards, which are meant only to be given as a gift, not as payment under threat. So if you think the payment feels fishy, it probably is.

Google also works with developers in Pakistan and is keenly aware of the challenges women face on the internet. Google’s Women Techmakers program recently partnered with Jigsaw, a unit that explores threats to open societies and builds technology to inspire scalable solutions, to bring online safety training to more and more women around the globe.

As a part of this program, the Women Techmakers ambassadors of Pakistan conducted eight online safety trainings and six ideathons to empower women to build solutions addressing online security concerns.

Social Media

On social media, which is widely used around the world, security risks are much higher, and users can become the target of scammers, bullies, or hackers in multiple ways. According to Statista, people have been spending 45 per cent more time on social media globally since March 2020, with a 17 per cent increase in the U.S.

Meta, the parent company of Facebook, Instagram, and WhatsApp, the most used social media applications, has introduced features and tools that give people control over what they share, who they share it with, the content they see and experience, and who can contact them.

Every day, people around the world share things on these platforms that result in new and incredible ideas, opportunities, friendships and collaborations. At the same time, responsibility lies not only with the platforms but with their users too.

Facebook asks people to consider their audience when sharing on Facebook and to be thoughtful about how and what they share. Its features let users decide who can see the content they share, and the company has policies that prohibit hateful, violent or sexually explicit content.

Just like Google, Facebook also supports two-factor authentication, including through the Google Authenticator app. Its Security Checkup tool lets users review the apps and browsers where they are logged in to Facebook and get alerts when an account is accessed from an unrecognised device.

Bullying and harassment are not allowed on Facebook or Instagram. But since not everything can be prevented or caught, some tools help users control their experience. For example, all messages from a bully can be ignored or blocked entirely without that person being notified, and users can also moderate comments on their posts.

Along with this, Facebook also lets users control who can reach out to them or send them messages and friend requests. Users can report anything that is inappropriate or makes them uncomfortable on Facebook by using the 'report' button. Facebook's teams work around the clock in over 50 languages and respond as quickly as possible.

While tech giants are taking steps to make the internet a safer place, it will be interesting to see how far they are willing to go to counter the constant threat of hackers and the like. Every year, a number of online bullying cases are reported in Pakistan, but many more go unreported, which encourages hackers, bullies, and scammers to keep making the internet unsafe.

All these features and tools are available to help users understand their responsibility when using the internet. Any wrong step or material can put not only their own lives in danger but those of others too.